How Canva Survived a 1.5M Request-Per-Second Traffic Spike and What It Teaches About Modern Infrastructure

When Canva faced an unprecedented 1.5 million requests per second, their infrastructure was put to the ultimate test. This traffic spike revealed critical insights about modern system architecture, load balancing, and scalability strategies that every tech company should understand.

Introduction

When your system suddenly receives 1.5 million requests per second, three times your normal peak load, you learn very quickly whether your infrastructure can handle the unexpected. For Canva's engineering team, November 12, 2024, became an intensive 52-minute masterclass in modern system resilience, CDN dependencies, and the cascading effects that can bring down even well-architected platforms.

The incident wasn't just a technical hiccup. More than 270,000 user requests were stuck waiting on a single JavaScript file, and Canva's entire platform was unavailable for nearly an hour. The stakes were user trust, business continuity, and the design work of users locked out of their projects.

What makes this story particularly valuable isn't just the dramatic scale of the traffic surge. It is how a perfect storm of seemingly unrelated issues combined into a system failure that bypassed traditional safeguards. Those issues were a CDN routing problem, a telemetry library bug, and an asset-loading bottleneck. The Canva team's transparent post-mortem offers critical insights into building truly resilient infrastructure in an interconnected digital ecosystem.

The Perfect Storm: When Multiple Systems Fail Simultaneously

According to the Canva engineering team, the incident began with what should have been a routine deployment. Canva deploys its editor multiple times a day, automatically publishing more than 100 static assets to AWS S3 and serving them through Cloudflare's CDN. On a typical day this process runs smoothly, with built-in canary systems designed to catch and abort problematic deployments.

But November 12 was different. Just as Canva deployed its new editor version, Cloudflare was experiencing network latency between its Singapore and Ashburn data centers. The culprit was a stale traffic management rule that routed IPv6 traffic over public transit instead of Cloudflare's private backbone, with packet loss peaking at 66%.

The timing couldn't have been worse. One critical JavaScript file, responsible for Canva's object panel feature, got caught in this network bottleneck. Instead of loading in seconds, it took 20 minutes to complete, and the 90th-percentile time to first byte (TTFB) rose by more than 1,700%.

This is where Cloudflare's cache streaming technology, normally a performance optimization, became a critical vulnerability. The system consolidated all requests for the slow-loading asset into a single stream. As a result, more than 270,000 user requests, mostly from Southeast Asia, were all waiting on the same file.
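The general mechanism at work here is request coalescing: concurrent cache misses for one object are collapsed into a single origin fetch. This is what turned one slow file into a single point of waiting. The sketch below is a generic asyncio illustration, not Cloudflare's implementation. `CoalescingCache`, `slow_origin`, and the `object-panel.js` key are hypothetical names, and a 0.2-second sleep stands in for the 20-minute fetch.

```python
import asyncio
from typing import Awaitable, Callable

class CoalescingCache:
    """Edge-cache sketch: concurrent misses for one key share one origin fetch."""

    def __init__(self, fetch_origin: Callable[[str], Awaitable[bytes]]):
        self._fetch_origin = fetch_origin
        self._cache: dict[str, bytes] = {}
        self._in_flight: dict[str, asyncio.Task] = {}
        self.origin_fetches = 0

    async def get(self, key: str) -> bytes:
        if key in self._cache:
            return self._cache[key]
        task = self._in_flight.get(key)
        if task is None:
            # First miss: start the only origin fetch for this key.
            self.origin_fetches += 1
            task = asyncio.create_task(self._fetch_origin(key))
            self._in_flight[key] = task
            task.add_done_callback(lambda _t, k=key: self._in_flight.pop(k, None))
        # Every requester waits on the same task, so when the fetch finally
        # finishes, all of them are released in the same instant.
        # shield() keeps one cancelled client from cancelling the shared fetch.
        body = await asyncio.shield(task)
        self._cache[key] = body
        return body

async def slow_origin(key: str) -> bytes:
    await asyncio.sleep(0.2)  # stand-in for the 20-minute fetch
    return b"/* object panel bundle */"

async def main() -> tuple[int, int]:
    cache = CoalescingCache(slow_origin)
    bodies = await asyncio.gather(*(cache.get("object-panel.js") for _ in range(1_000)))
    return cache.origin_fetches, len(bodies)

fetches, served = asyncio.run(main())
print(fetches, served)  # 1 1000
```

Coalescing protects the origin from 1,000 identical fetches. The flip side is that every waiter's fate is tied to that one fetch, and all of them resume together.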

The Moment Everything Collapsed

When that JavaScript file finally loaded after 20 minutes, all 270,000+ pending requests completed simultaneously. This triggered what the Canva team describes as a "thundering herd" effect. Users across Southeast Asia suddenly had the JavaScript needed to load the object panel, and their browsers all called Canva's APIs at once.

The result was 1.5 million requests per second hitting Canva's API Gateway, three times its typical peak load. Under normal circumstances, this might have been manageable. But a hidden performance bug in Canva's telemetry library had been quietly degrading the system's ability to handle concurrent requests.

The bug was subtle but devastating: changes to the telemetry code caused metrics to be re-registered repeatedly under a lock, creating lock contention on the API Gateway's event loop threads. As traffic spiked, this contention sharply reduced each API Gateway task's maximum throughput just when peak performance was needed.
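The bug as described follows a familiar anti-pattern: doing registry work on the hot path. The sketch below is hypothetical. The `Registry`, `Counter`, and handler names are invented, and Canva's telemetry code is not shown. The buggy handler takes a registry-wide lock on every request, while the fixed one registers once at startup and reuses the handle. Because of Python's GIL, this won't reproduce the throughput collapse. It only counts how often callers serialize on the shared lock.

```python
import threading

class Counter:
    """A single metric; cheap to update once you hold a reference to it."""
    def __init__(self) -> None:
        self._lock = threading.Lock()   # per-metric lock, held only for the increment
        self.value = 0

    def inc(self) -> None:
        with self._lock:
            self.value += 1

class Registry:
    """Hypothetical metrics registry guarded by one registry-wide lock."""
    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._metrics: dict[str, Counter] = {}
        self.lock_acquisitions = 0      # how often callers serialized on the shared lock

    def register(self, name: str) -> Counter:
        with self._lock:
            self.lock_acquisitions += 1
            return self._metrics.setdefault(name, Counter())

registry = Registry()

def handle_request_buggy() -> None:
    # Anti-pattern: (re-)register on every request, so every request
    # contends for the registry-wide lock on the hot path.
    registry.register("gateway_requests").inc()

REQUESTS = registry.register("gateway_requests")  # registered once, at startup

def handle_request_fixed() -> None:
    # Fix: keep the handle; the hot path never touches the registry lock.
    REQUESTS.inc()

def shared_lock_hits(handler, n: int = 10_000) -> int:
    before = registry.lock_acquisitions
    for _ in range(n):
        handler()
    return registry.lock_acquisitions - before

print(shared_lock_hits(handle_request_buggy))  # 10000
print(shared_lock_hits(handle_request_fixed))  # 0
```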

The cascading failure was swift and complete. API Gateway tasks couldn't process requests fast enough, so a backlog built up. Load balancers kept opening new connections to already overloaded tasks, increasing memory pressure. The Linux Out-of-Memory (OOM) killer terminated containers faster than autoscaling could replace them, ultimately bringing down the entire system within two minutes.
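The failure loop in that paragraph can be made concrete with a deliberately crude discrete-time model. It is not a reconstruction of Canva's system. Every number (task count, per-task capacity, memory budget, startup delay) is invented. The model also assumes the load balancer splits traffic evenly, and its "OOM killer" simply removes any task whose queued backlog exceeds a fixed budget.

```python
def simulate(rate: float, tasks: int = 10, capacity: float = 100.0,
             mem_limit: float = 300.0, startup_delay: int = 3,
             seconds: int = 30) -> list[int]:
    """Toy model: return the number of live tasks at the end of each second."""
    backlogs = [0.0] * tasks   # requests queued in memory, one entry per live task
    starting: list[int] = []   # seconds left before each replacement is ready
    history = []
    for _ in range(seconds):
        # Replacements that finished starting join the pool with empty queues.
        starting = [s - 1 for s in starting]
        backlogs += [0.0] * starting.count(0)
        starting = [s for s in starting if s > 0]

        if backlogs:
            # The load balancer splits traffic evenly across live tasks; whatever
            # a task cannot process this second stays queued (and in memory).
            share = rate / len(backlogs)
            backlogs = [max(0.0, b + share - capacity) for b in backlogs]
            # Crude OOM killer: any task whose backlog exceeds mem_limit
            # (measured in queued requests) disappears.
            backlogs = [b for b in backlogs if b <= mem_limit]

        # The autoscaler requests enough replacements to get back to `tasks`.
        missing = tasks - len(backlogs) - len(starting)
        starting += [startup_delay] * max(0, missing)
        history.append(len(backlogs))
    return history

print(simulate(800)[:8])                    # [10, 10, 10, 10, 10, 10, 10, 10]
print(simulate(3000)[:8])                   # [10, 0, 0, 0, 10, 0, 0, 0]
print(min(simulate(3000, capacity=310.0)))  # 10
```

With these made-up numbers, three times the nominal load wipes out the whole pool every few seconds, even though the autoscaler keeps requesting replacements. Each fresh task is overwhelmed as soon as it joins. Raising per-task capacity just above the per-task share, as removing a throughput-limiting bug might, keeps every task alive.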

Crisis Response: How to Stop a Digital Avalanche

When your entire platform goes down during peak usage hours, every second counts. The Canva team's response reveals both the complexity of modern incident management and the critical importance of having nuclear options available.

Their first instinct was logical but ineffective: scale up the API Gateway tasks. New tasks would spin up, immediately become overwhelmed by the ongoing traffic surge, and get terminated just as quickly. Manual scaling couldn't outpace the failure cycle.

The breakthrough came at 9:29 AM UTC when they made a dramatic decision: block all traffic at the CDN level. Using Cloudflare's firewall rules, they temporarily cut off all access to canva.com, redirecting users to a status page. This nuclear option gave their systems breathing room to stabilize without the pressure of incoming requests.

The gradual restoration process was equally strategic. Rather than opening the floodgates all at once, they implemented geographical traffic shaping, starting with Australian users under strict rate limits and incrementally expanding to other regions. This measured approach ensured they could maintain stability while scaling back up to full capacity.
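The block-then-restore pattern can be sketched as an edge gate. The gate starts fully closed, opens one group of regions at a time, and rate-limits each open region with a token bucket. This is an illustration under stated assumptions, not Canva's or Cloudflare's configuration. The source names only Australia as the first stage, so the later stage (`NZ`, `SG`) and the rate and burst values are hypothetical, as are the `RestorationGate` and `TokenBucket` names.

```python
import time
from typing import Callable

class TokenBucket:
    """Classic token bucket: refills at `rate` tokens/second, up to `burst`."""
    def __init__(self, rate: float, burst: int, now: Callable[[], float]):
        self.rate, self.burst, self.now = rate, burst, now
        self.tokens = float(burst)
        self.last = now()

    def allow(self) -> bool:
        t = self.now()
        self.tokens = min(self.burst, self.tokens + (t - self.last) * self.rate)
        self.last = t
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False

class RestorationGate:
    """Starts fully closed (the 'block everything' state) and reopens region by region."""
    def __init__(self, stages: list[list[str]], rate: float, burst: int,
                 now: Callable[[], float] = time.monotonic):
        self.stages = stages
        self.open_count = 0              # number of stages currently admitted
        self.rate, self.burst, self.now = rate, burst, now
        self.buckets: dict[str, TokenBucket] = {}

    def open_next_stage(self) -> None:
        self.open_count = min(self.open_count + 1, len(self.stages))

    def admit(self, region: str) -> bool:
        open_regions = {r for stage in self.stages[:self.open_count] for r in stage}
        if region not in open_regions:
            return False                 # caller serves the static status page instead
        if region not in self.buckets:
            self.buckets[region] = TokenBucket(self.rate, self.burst, self.now)
        return self.buckets[region].allow()

clock = [0.0]  # fake clock so the example is deterministic
gate = RestorationGate([["AU"], ["NZ", "SG"]], rate=5, burst=5, now=lambda: clock[0])
print(gate.admit("AU"))                      # False: everything blocked
gate.open_next_stage()
print([gate.admit("AU") for _ in range(7)])  # [True, True, True, True, True, False, False]
print(gate.admit("SG"))                      # False: SG is in a later stage
clock[0] = 1.0
print(gate.admit("AU"))                      # True: bucket refilled
```

Widening the gate is then a matter of calling `open_next_stage()` and raising the per-region rate while watching backend health.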

The Hidden Technical Debt That Made Everything Worse

Perhaps the most sobering aspect of this incident was that the telemetry bug behind the cascading failure had already been identified and fixed. The patch was sitting in Canva's release pipeline on the day of the incident, but the team had underestimated its impact and hadn't expedited its deployment.

This reveals a critical lesson about technical debt and performance regressions. The telemetry issue seemed minor under normal conditions, just a small performance degradation that wasn't immediately visible. But under extreme load, it became the difference between weathering a traffic spike and complete system failure.

The bug specifically affected Canva's event loop model, where code running on event loop threads must never perform blocking operations. When metrics were re-registered under lock during high traffic, it created the exact kind of blocking behavior that their architecture was designed to avoid.
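The rule that event loop threads must never block can be observed directly by measuring loop lag. This is a Python asyncio sketch rather than Canva's gateway stack, which isn't described here. `time.sleep(0.2)` is an assumed stand-in for a thread stuck waiting on a contended lock. Offloading to a worker thread is shown only as a contrast; Canva's actual fix was the telemetry patch.

```python
import asyncio
import time

async def lag_monitor(interval: float, samples: int, lags: list[float]) -> None:
    """Record how late each timed wake-up fires; a blocked loop shows up as lag."""
    loop = asyncio.get_running_loop()
    for _ in range(samples):
        expected = loop.time() + interval
        await asyncio.sleep(interval)
        lags.append(loop.time() - expected)

def contended_work() -> None:
    time.sleep(0.2)  # stand-in for waiting on a lock another thread holds

async def run(block_loop: bool) -> float:
    lags: list[float] = []
    monitor = asyncio.create_task(lag_monitor(0.01, 20, lags))
    await asyncio.sleep(0.05)
    if block_loop:
        contended_work()                         # on the loop thread: every task stalls
    else:
        await asyncio.to_thread(contended_work)  # off the loop thread: others keep running
    await monitor
    return max(lags)

blocked_lag = asyncio.run(run(block_loop=True))
offloaded_lag = asyncio.run(run(block_loop=False))
print(f"blocked: {blocked_lag:.3f}s, offloaded: {offloaded_lag:.3f}s")
```

In the blocked run, every coroutine on the loop, not just the one doing the slow work, waits roughly 0.2 seconds. asyncio's debug mode (`loop.set_debug(True)`) can also log callbacks that run longer than `loop.slow_callback_duration`.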

Business Impact and Recovery Metrics

The 52-minute outage had immediate and measurable business consequences. With canva.com completely unavailable during peak usage hours, millions of users across multiple time zones couldn't access their design projects, collaborate with teammates, or create new content.

The geographic concentration of the initial problem, primarily affecting Southeast Asian users due to the Cloudflare routing issue, demonstrates how global infrastructure dependencies can create uneven impact patterns. What started as a regional CDN problem cascaded into a worldwide platform outage.

The incident also revealed gaps in user communication. Initially, users saw generic browser error pages rather than informative status updates, which left them unsure whether the problem was local or systemic. Users were redirected to a proper status page only once the traffic block was in place.

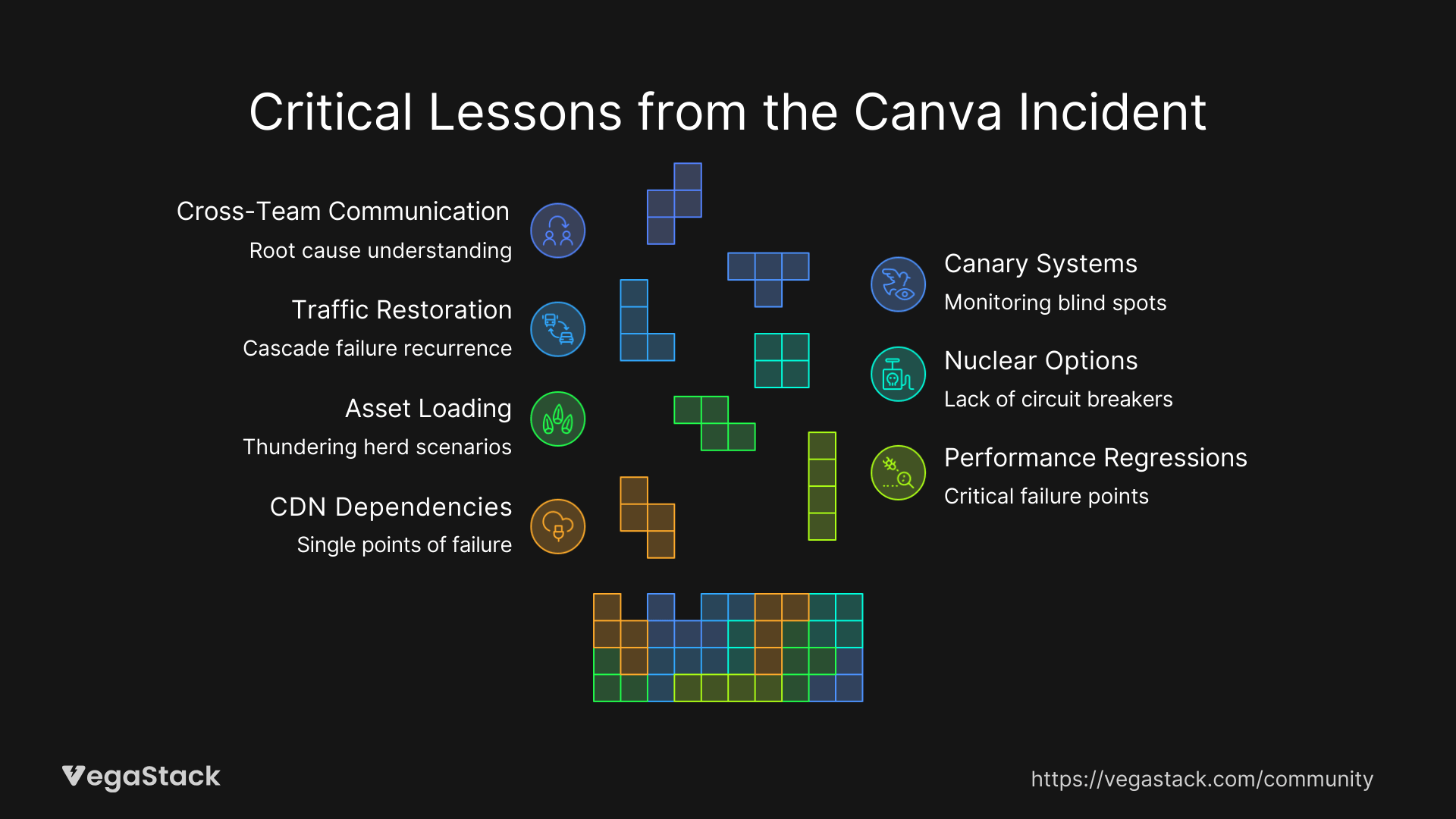

7 Critical Lessons for Modern Infrastructure

The Canva incident offers several transferable insights for organizations building resilient systems at scale:

1. CDN Dependencies Are Single Points of Failure: Even with robust internal architecture, external CDN issues can create cascading failures. Building monitoring and fallback strategies for CDN performance is essential.

2. Performance Regressions Hide Until They Matter Most: Small performance bugs that seem manageable under normal load can become critical failure points during traffic spikes. Regular load testing should specifically target these edge cases.

3. Asset Loading Strategies Need Failure Modes: Consolidating requests for slow-loading assets can create thundering herd scenarios. Implementing timeouts and progressive degradation prevents users from getting stuck in infinite wait states.

4. Nuclear Options Save Systems: Having the ability to immediately block traffic at the CDN level provided the breathing room needed for recovery. Every system needs clearly defined circuit breakers.

5. Gradual Traffic Restoration Is Critical: The geographic and rate-limited approach to restoring service prevented a second cascade failure. Planning traffic restoration is as important as emergency shutdown procedures.

6. Canary Systems Have Blind Spots: Traditional error-rate monitoring missed this failure because the affected requests never completed, so they never registered as errors. Expanding canary metrics to include completion events provides better coverage.

7. Cross-Team Communication Protocols Matter: Working with Cloudflare to understand the routing issue at the root of the incident was essential for preventing recurrence.
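Lesson 6 is easy to express as a gating rule. This sketch uses hypothetical names and thresholds (`CanaryWindow`, a 1% error budget, a 95% completion floor), not Canva's canary configuration. It shows how a window where most page loads never finish, yet almost nothing errors, passes an error-rate-only check. Only the completion-rate check catches it.

```python
from dataclasses import dataclass

@dataclass
class CanaryWindow:
    """Counts observed from canary users over one evaluation window."""
    loads_started: int
    loads_completed: int
    errors: int

def canary_verdict(w: CanaryWindow, max_error_rate: float = 0.01,
                   min_completion_rate: float = 0.95,
                   check_completion: bool = True) -> str:
    if w.loads_started == 0:
        return "wait"  # not enough traffic to judge yet
    if w.errors / w.loads_started > max_error_rate:
        return "abort: errors"
    # A stalled asset produces almost no errors; only the missing
    # completion events reveal it.
    if check_completion and w.loads_completed / w.loads_started < min_completion_rate:
        return "abort: loads not completing"
    return "promote"

stalled = CanaryWindow(loads_started=10_000, loads_completed=1_200, errors=3)
print(canary_verdict(stalled, check_completion=False))  # promote
print(canary_verdict(stalled))                          # abort: loads not completing
```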

Building Anti-Fragile Systems

The most impressive aspect of Canva's response wasn't just their technical recovery, but their systematic approach to preventing similar failures. Their action plan addresses multiple layers of the stack, from infrastructure scaling and load shedding to improved deployment guardrails and enhanced monitoring.

They're implementing additional load shedding rules specifically designed to handle traffic patterns like the thundering herd scenario. They're expanding their canary release indicators to include page load completion events, not just error rates. And they're adding regular load testing that specifically targets the API Gateway's ability to handle traffic surges.
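The post-mortem summary here doesn't say how Canva's new load-shedding rules work. As a generic illustration, here is a concurrency-limit shedder. Once a fixed number of requests are in flight, new ones are rejected immediately with a cheap response instead of being queued, so a task's memory stops growing under a burst. `LoadShedder`, the limit of 100, and the 503 status are all assumptions.

```python
import asyncio
from typing import Awaitable, Callable

class LoadShedder:
    """Reject work immediately once too many requests are in flight."""
    def __init__(self, max_in_flight: int) -> None:
        self.max_in_flight = max_in_flight
        self.in_flight = 0
        self.shed = 0

    async def handle(self, handler: Callable[[], Awaitable[int]]) -> int:
        # Single-threaded asyncio: check-and-increment cannot race here.
        if self.in_flight >= self.max_in_flight:
            self.shed += 1
            return 503  # cheap rejection; the client should back off and retry
        self.in_flight += 1
        try:
            return await handler()
        finally:
            self.in_flight -= 1

async def fake_backend() -> int:
    await asyncio.sleep(0.05)  # simulated request work
    return 200

async def main() -> tuple[int, int]:
    shedder = LoadShedder(max_in_flight=100)
    results = await asyncio.gather(*(shedder.handle(fake_backend) for _ in range(1_000)))
    return results.count(200), results.count(503)

ok, rejected = asyncio.run(main())
print(ok, rejected)  # 100 900
```

Rejecting early is the design choice that matters. In the incident, queued work and extra connections drove memory up until the OOM killer intervened. A fast 503 costs almost nothing by comparison.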

Perhaps most importantly, they're building detailed runbooks for traffic management during incidents. The ability to quickly implement geographical traffic shaping and progressive restoration turned a potential multi-hour outage into a 52-minute learning experience.

The Transparency Dividend

This incident report represents Canva's first public post-mortem, marking a shift toward radical transparency in how they handle system failures. By sharing detailed technical analysis alongside business impact, they're contributing valuable knowledge to the broader engineering community while demonstrating accountability to their users.

The decision to publish this level of detail, including the specific technical bugs, timeline of decisions, and collaboration with external vendors, sets a high bar for incident transparency. It transforms a system failure into a learning opportunity for the entire industry.

As modern applications become increasingly dependent on complex chains of microservices, CDNs, and third-party infrastructure, the lessons from Canva's 52-minute journey through system failure and recovery become essential reading for anyone building resilient digital systems at scale.