How LinkedIn Eliminated DNS Outages for 1 Billion Users: A Custom DNS Client Success Story

Discover how LinkedIn engineered a custom DNS solution to eliminate outages for 1 billion users worldwide. Learn their proven approach to DNS architecture, failover strategies, and performance optimization that achieved unprecedented reliability and scale for mission-critical infrastructure.

The Hidden Infrastructure Challenge That Could Break Everything

When you're serving over one billion users worldwide, every millisecond matters. But there's one critical piece of infrastructure that most organizations take for granted until it fails catastrophically: DNS resolution. According to the LinkedIn engineering team, their journey to building a bulletproof DNS client reveals how a seemingly simple system component can make or break global operations.

The stakes couldn't be higher. A DNS failure doesn't just slow down one application, it can cascade across entire systems, affecting every service that relies on domain name resolution. For LinkedIn's massive scale, even minor DNS hiccups translate to degraded user experiences for millions of professionals worldwide.

What makes this story particularly compelling is how LinkedIn's team transformed a routine infrastructure upgrade into a masterclass in resilient system design, ultimately processing millions of DNS queries per second with sub-millisecond latency while preventing multiple potential outages.

The Breaking Point: When Standard Solutions Can't Scale

For years, LinkedIn relied on the Name Service Cache Daemon (NSCD) for DNS caching across their infrastructure. Like many organizations, they assumed this standard solution would scale alongside their growth. They were wrong.

As LinkedIn's infrastructure expanded to support their growing user base, NSCD began showing critical weaknesses. The system suffered from poor visibility, when DNS issues occurred, engineers struggled to diagnose problems quickly. The lack of robust debugging tools turned routine troubleshooting into time-consuming investigations that could stretch for hours.

Even more concerning was NSCD's inability to handle the sophisticated reliability requirements of web-scale infrastructure. When upstream DNS servers experienced issues, NSCD couldn't intelligently route around problems or provide the granular control needed for different applications across LinkedIn's diverse service ecosystem.

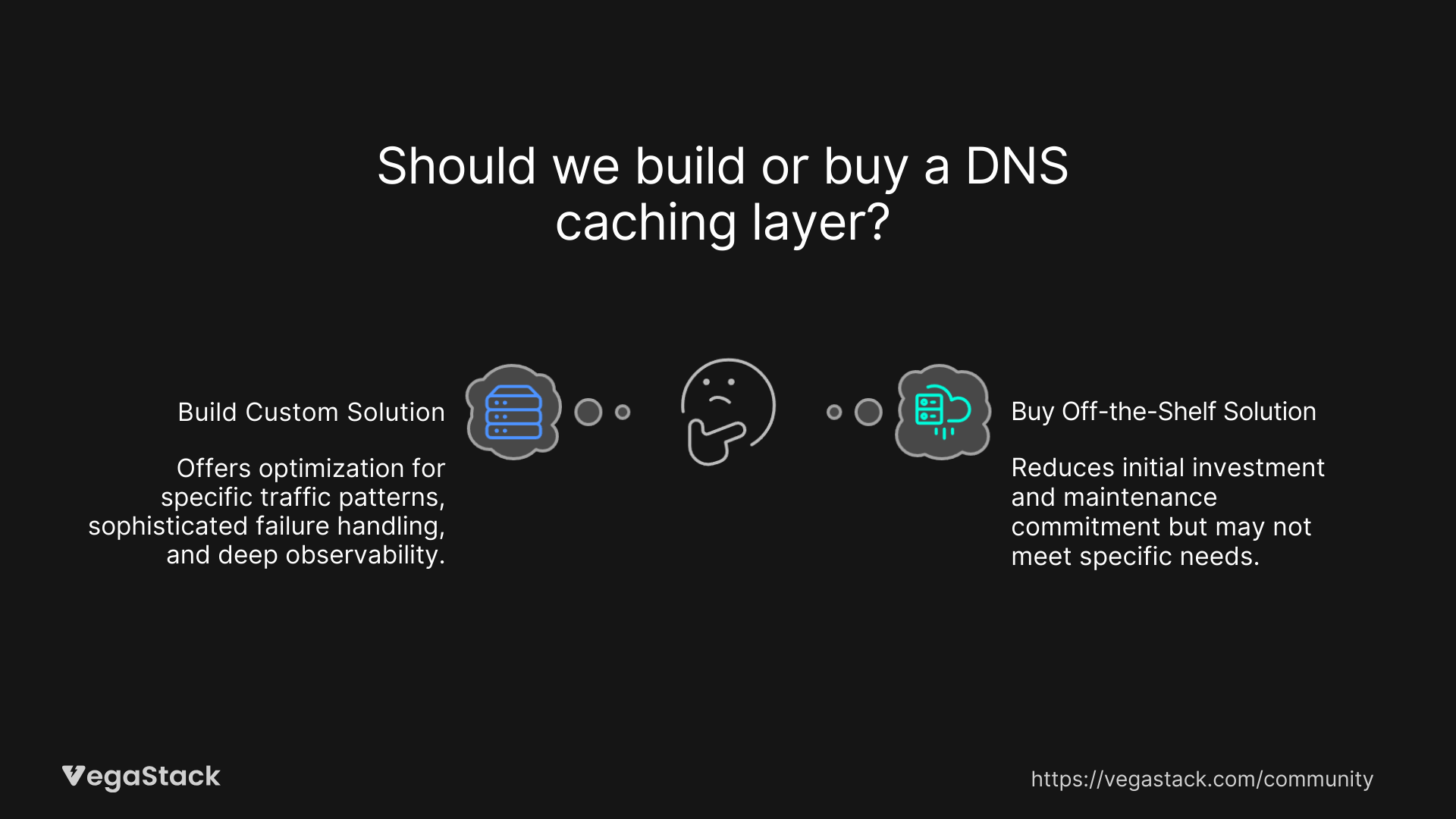

The LinkedIn team evaluated existing alternatives like systemd-resolved and Unbound, but none met their specific requirements for reliability, scalability, and operational visibility. They faced a critical decision: accept the limitations of available solutions or build something entirely new.

The Decision: Building vs. Buying at Enterprise Scale

Rather than compromise on their infrastructure requirements, LinkedIn's engineering team made the strategic decision to develop their own DNS Caching Layer (DCL). This wasn't a decision made lightly, building custom infrastructure components requires significant engineering investment and ongoing maintenance commitment.

The team recognized that DNS sits at the foundation of all their services. A custom solution would allow them to optimize for their specific traffic patterns, implement sophisticated failure handling, and gain the deep observability needed to maintain service levels for a billion users.

Their approach centered on three core principles: high availability through intelligent fault detection, operational simplicity to reduce maintenance overhead, and comprehensive visibility to enable proactive problem resolution.

Engineering a DNS Client for Billion-User Scale

Core Architecture and Smart Design Decisions

The LinkedIn team built DCL as a high-performance daemon that runs on every host in their infrastructure, listening on localhost:53 to seamlessly integrate with existing applications. The system supports both TCP and UDP protocols, ensuring robust handling of all DNS query types, including truncated responses that require protocol switching.

One of DCL's most innovative features is its adaptive timeout mechanism, inspired by RFC 6298. Instead of using fixed 5-second timeouts that can delay applications unnecessarily, DCL dynamically adjusts query timeouts based on real-time latency measurements. This means faster responses during normal operations and appropriate patience during network congestion.

The system implements intelligent exponential backoff to prevent overwhelming degraded upstream servers. When DNS servers start struggling, DCL automatically reduces query pressure, giving infrastructure time to recover rather than contributing to cascading failures.

Proactive Failure Detection and Recovery

DCL continuously monitors DNS query error rates to upstream servers, automatically isolating endpoints that exceed predefined failure thresholds. When servers are marked as unhealthy, DCL performs periodic health checks while immediately retrying failed queries against healthy servers.

This proactive approach has prevented multiple major incidents where DNS server misconfigurations left upstream infrastructure technically reachable but functionally broken. By shielding applications from these infrastructure breakdowns, DCL maintains service reliability even during backend failures.

Zero-Downtime Operations

The system supports dynamic configuration management, enabling real-time updates without service restarts. This capability allows LinkedIn's team to deploy configuration changes and code updates without any service interruption, critical for maintaining availability across thousands of hosts.

DCL implements a warm cache mechanism that proactively refreshes DNS records before they expire, preventing cache misses that could introduce latency spikes. The system also preserves cache state across restarts, ensuring that service maintenance doesn't trigger DNS query floods that could overwhelm upstream infrastructure.

Implementation: Rolling Out Critical Infrastructure Changes

Risk Mitigation Through Phased Deployment

Rolling out a new DNS client across LinkedIn's entire fleet represented enormous risk, a single bug could cause correlated failures across thousands of hosts simultaneously. The team designed a careful 3-phase deployment strategy to minimize this risk.

Phase one involved installing DCL across all hosts without affecting live traffic, allowing comprehensive validation of configurations and functionality while existing systems continued serving queries. The team used this phase to validate health checks, metrics collection, and alerting systems.

Phase two progressively shifted DNS traffic from NSCD to DCL, starting with small host subsets and expanding as confidence grew. This gradual migration allowed the team to monitor performance and catch any issues before they could impact significant portions of their infrastructure.

The final phase involved stopping NSCD once DCL was fully validated, completing the transition to their new DNS infrastructure.

Safety Mechanisms and Fallbacks

To ensure reliability during rollout, the team implemented an external health checker running alongside DCL on every host. This checker periodically validates DCL's health, automatically switching hosts to cacheless mode if issues are detected while alerting the site reliability team.

If failures persist for more than 15 minutes, the system temporarily restarts NSCD to restore caching functionality. However, according to the LinkedIn team, their rigorous testing and validation process was so thorough that they've never needed to invoke this fallback mechanism in production.

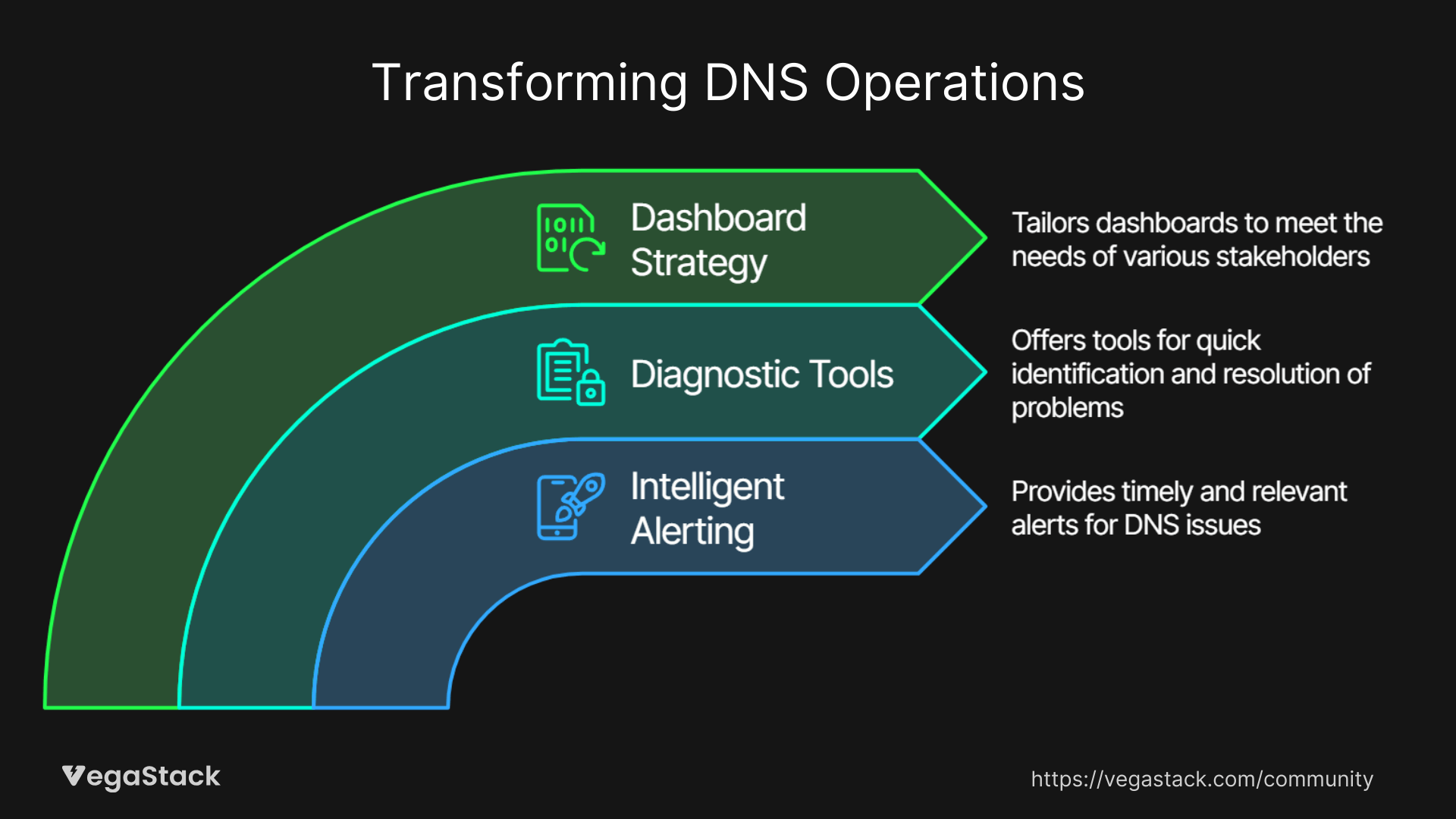

Transforming DNS Operations Through Advanced Observability

Intelligent Alerting That Actually Works

One of DCL's most valuable contributions has been solving the DNS alerting problem. Traditional DNS monitoring generates excessive noise due to the high variability in query patterns across different applications and hosts.

DCL's client-side metrics enable aggregate-based alerts that leverage fleet-wide patterns rather than isolated signals. For example, LinkedIn now triggers alerts when 5% of total DNS queries fail across their infrastructure, ensuring engineers are only paged for genuinely significant issues.

This approach has enabled proactive detection and mitigation of faulty upstream DNS servers before they could cause widespread application impact, demonstrating how better observability translates directly to improved reliability.

Diagnostic Tools for Rapid Problem Resolution

The system provides granular metrics that maintain efficiency while offering sufficient detail for troubleshooting. DCL leverages structured logging for detailed analysis, crucial for root-cause investigations when only specific queries fail during incidents.

A particularly innovative feature is DCL's tracing capability, which can be enabled on-demand to provide fine-grained visibility into DNS queries and responses. The system identifies which applications initiated specific queries by backtracking UDP ports, solving a critical gap in DNS troubleshooting.

Dashboard Strategy for Different Stakeholders

LinkedIn's team structured their DCL dashboards by tiers of granularity and use cases, making it easier to pinpoint issues at different organizational levels. Their dashboards cover high-level service indicators for executives, DNS upstream health for on-call engineers, latency metrics for performance optimization, per-host visibility for troubleshooting, and long-term trends for capacity planning.

This structured approach ensures quick access to actionable data, enabling faster incident resolution and better strategic decision-making across different roles and responsibilities.

Remarkable Results: The Business Impact of Better DNS

Performance Improvements at Scale

According to the LinkedIn engineering team, DCL now processes millions of DNS queries per second across their infrastructure, achieving sub-millisecond resolution latency for most requests. This represents a dramatic improvement over their previous NSCD-based system and directly translates to faster page loads and better user experiences for LinkedIn's billion-plus members.

The system's robust fault detection and isolation mechanisms have seamlessly masked multiple infrastructure failures, ensuring uninterrupted application performance even when backend DNS servers experience issues.

Operational Excellence Through Visibility

DCL's comprehensive observability has reduced LinkedIn's mean time to detect (MTTD) infrastructure outages from hours to minutes. This improvement in incident response capability means problems are identified and resolved before they can significantly impact user experience.

The system's application-level DNS visibility enables service owners to quickly diagnose connectivity issues, reducing the time engineers spend investigating network problems and increasing their ability to focus on feature development and other value-added activities.

Prevention of Major Incidents

One of DCL's most significant but often invisible contributions has been preventing outages before they occur. The system's validation layer has proactively caught multiple misconfiguration errors that could have caused service disruptions.

The intelligent failure isolation has prevented scenarios where DNS server misconfigurations could have propagated throughout LinkedIn's infrastructure, potentially affecting millions of users worldwide.

Key Lessons for Modern Infrastructure Teams

The Hidden Cost of Infrastructure Assumptions

LinkedIn's experience demonstrates how seemingly simple infrastructure components can become critical bottlenecks at scale. Organizations often assume standard solutions will grow with their needs, but web-scale operations require careful evaluation of every system component.

The decision to invest in custom DNS infrastructure paid dividends not just in performance, but in operational capability and incident prevention. Sometimes the most valuable engineering work happens in foundational systems that users never see directly.

Observability as a Strategic Investment

DCL's comprehensive monitoring and alerting capabilities have proven as valuable as its performance improvements. Better visibility enables proactive problem resolution, more efficient incident response, and strategic planning based on actual usage patterns rather than assumptions.

Modern infrastructure teams should prioritize observability not as an afterthought, but as a core requirement that influences architectural decisions from the beginning.

Risk Mitigation in Critical System Changes

The phased deployment approach and comprehensive safety mechanisms demonstrate how to manage risk when changing foundational infrastructure. The investment in gradual rollouts, health checking, and fallback mechanisms prevented potential outages that could have affected millions of users.

Organizations planning significant infrastructure changes should budget time and resources for careful deployment strategies, not just the technical implementation itself.

The Future of Resilient Infrastructure

LinkedIn's DNS client success story illustrates how thoughtful engineering investment in foundational systems can yield compound returns across an entire technology stack. By solving DNS reliability and visibility challenges, they've created a platform that enables faster development, more reliable operations, and better user experiences.

As organizations continue scaling their digital operations, the lessons from LinkedIn's DCL implementation become increasingly relevant. The combination of intelligent failure handling, comprehensive observability, and careful deployment practices provides a blueprint for upgrading critical infrastructure without compromising reliability.

The question for engineering leaders isn't whether your infrastructure will need similar upgrades, but whether you'll recognize the need before performance and reliability issues force your hand.