Security Metrics: How to Measure and Improve Your Security Posture with Data-Driven KPIs

Transform your security program with comprehensive metrics and data-driven KPIs. Learn how to measure security effectiveness, track improvement trends, and make informed decisions based on actionable security analytics that drive measurable improvements in your organization's security posture.

Introduction

"You can't improve what you don't measure", this fundamental principle becomes critically important when it comes to organizational security. As senior engineers at VegaStack, we've witnessed countless organizations struggle with security metrics, either tracking vanity metrics that provide little actionable insight or drowning in data without understanding what truly matters for their security posture.

The challenge isn't just collecting security data; it's selecting the right metrics, presenting them effectively, and translating trends into actionable improvements. We recently worked with a mid-sized financial services company that was spending $8,000 monthly on security tools but had no clear visibility into whether those investments were actually improving its security effectiveness.

In this comprehensive guide, we'll walk you through our proven methodology for implementing security metrics that provide real insights. You'll learn how to select meaningful KPIs, create actionable dashboards, and perform trend analysis that drives continuous security improvement. By the end, you'll have a framework for transforming your security program from reactive firefighting to proactive, data-driven protection.

The Problem: Flying Blind in Security Operations

The most frustrating conversation we have with security teams starts the same way: "We know we need better security, but we don't know if what we're doing is working." This uncertainty isn't just uncomfortable; it's dangerous and expensive.

Consider the scenario we encountered with a growing SaaS company. They had deployed multiple security tools, hired additional security staff, and implemented various policies, yet they couldn't answer basic questions: Were they detecting threats faster than they had 6 months earlier? Had their incident response times improved? Were their security investments reducing actual risk?

Traditional approaches to security measurement often fail because they focus on easily quantifiable but ultimately meaningless metrics. Counting the number of alerts generated, patches applied, or security training sessions completed tells you about activity, not effectiveness. These vanity metrics create a false sense of progress while missing critical gaps in security posture.

The technical complexities compound this challenge. Security data lives in disparate systems: SIEM platforms, vulnerability scanners, endpoint detection tools, identity management systems, and cloud security platforms. Each tool speaks its own language, uses different severity classifications, and operates on different timelines. Aggregating this data into meaningful insights requires both technical expertise and strategic thinking about what really matters for organizational security.

Solution Framework: Building a Comprehensive Security Metrics Program

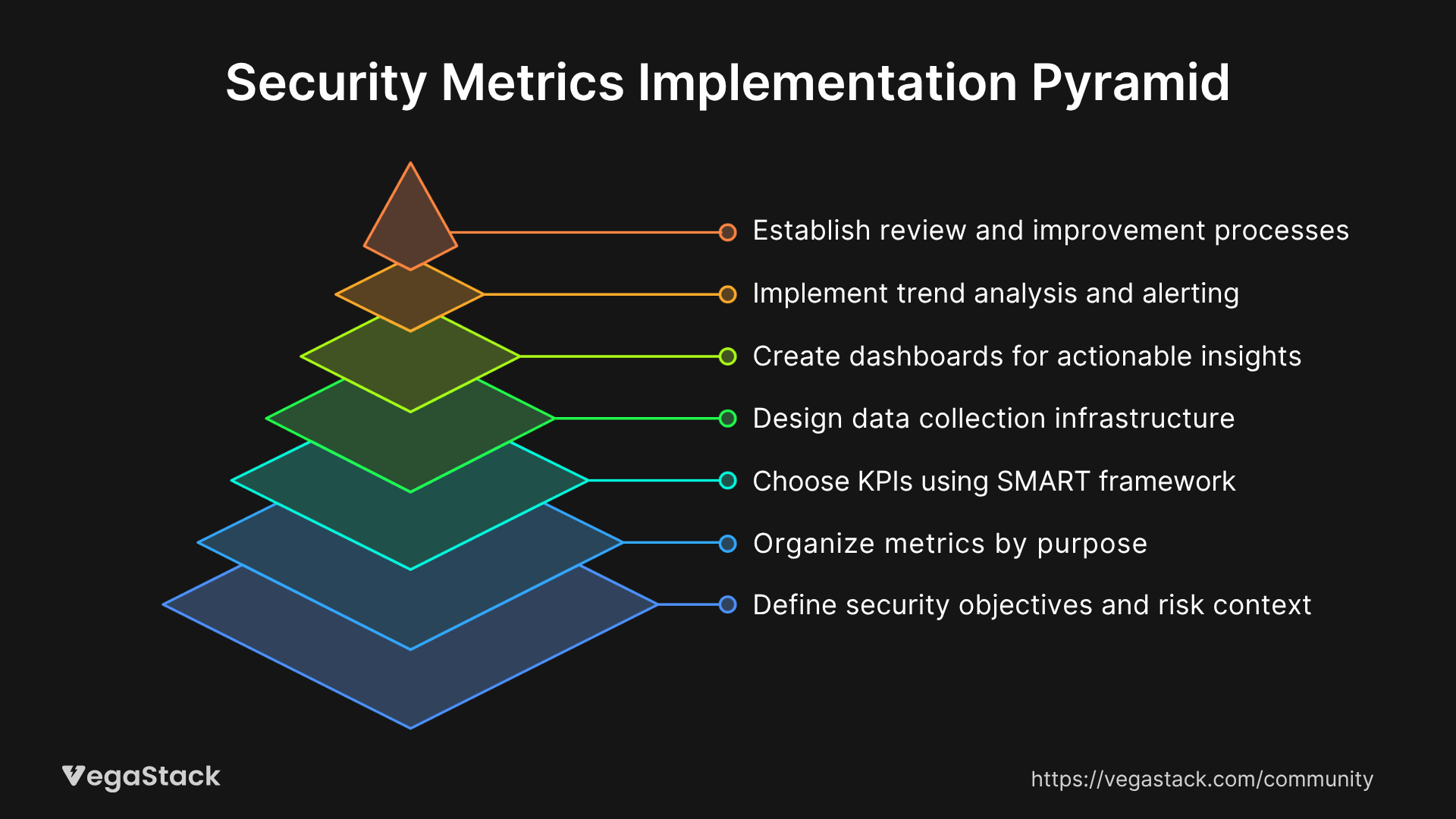

Our approach to security metrics implementation follows a systematic 7-step methodology that transforms scattered security data into actionable intelligence.

Step 1: Define Security Objectives and Risk Context

Before selecting any metrics, we establish clear security objectives aligned with business goals. This isn't about creating a wish list of security improvements; it's about understanding your organization's specific risk profile, compliance requirements, and operational constraints. We work with stakeholders to identify the critical assets that need protection, the threat scenarios that pose the greatest risk, and the potential business impact of security incidents.

Step 2: Categorize Metrics by Purpose

We organize security metrics into 4 distinct categories, each serving a specific purpose. Operational metrics track day-to-day security activities like mean time to detection and incident response times. Strategic metrics measure longer-term security program effectiveness, such as risk reduction trends and security maturity scores. Compliance metrics ensure regulatory requirements are met and maintained. Leading indicators predict future security challenges before they become problems.
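As a rough illustration of the four categories, the sketch below tags each metric with its category so that dashboards and reviews can filter by purpose. The metric names, units, and the `MetricDefinition` type are hypothetical and chosen only for the example; they are not a prescribed catalog.

```python
from dataclasses import dataclass
from enum import Enum


class MetricCategory(Enum):
    OPERATIONAL = "operational"   # day-to-day activity, e.g. time to detection
    STRATEGIC = "strategic"       # longer-term program effectiveness
    COMPLIANCE = "compliance"     # regulatory requirements met and maintained
    LEADING = "leading"           # early signals of future problems


@dataclass(frozen=True)
class MetricDefinition:
    name: str
    category: MetricCategory
    unit: str


# Hypothetical catalog entries, for illustration only.
CATALOG = [
    MetricDefinition("mean_time_to_detect", MetricCategory.OPERATIONAL, "days"),
    MetricDefinition("incident_response_time", MetricCategory.OPERATIONAL, "hours"),
    MetricDefinition("security_maturity_score", MetricCategory.STRATEGIC, "score"),
    MetricDefinition("audit_controls_passing", MetricCategory.COMPLIANCE, "percent"),
    MetricDefinition("patch_deployment_delay", MetricCategory.LEADING, "days"),
]


def group_by_category(catalog):
    """Return a dict mapping every category to the metric names filed under it."""
    grouped = {category: [] for category in MetricCategory}
    for metric in catalog:
        grouped[metric.category].append(metric.name)
    return grouped
```

Starting from an explicit list like this makes it easier to spot a category with no metrics at all, which is usually a sign that part of the program is not being measured.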

Step 3: Select Primary KPIs Using the SMART Framework

Key Performance Indicators must be Specific, Measurable, Achievable, Relevant, and Time-bound. We typically recommend organizations focus on 5-7 primary KPIs rather than trying to track dozens of metrics. Our selection process evaluates each potential KPI against three criteria: does it drive behavior toward better security outcomes, can it be influenced by the security team's actions, and does it provide early warning of emerging problems?
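The three screening questions can be captured as a simple checklist. The sketch below is one possible reading, not a fixed rule: it assumes a candidate must satisfy all three criteria to qualify, and it caps the shortlist at seven to match the upper end of the 5-7 range. The `CandidateKpi` type and the example names in the usage note are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CandidateKpi:
    name: str
    drives_better_outcomes: bool  # does it push behavior toward better security?
    team_can_influence: bool      # can the security team's actions move it?
    gives_early_warning: bool     # does it flag emerging problems early?

    def criteria_met(self) -> int:
        """Count how many of the three criteria this candidate satisfies."""
        return sum((
            self.drives_better_outcomes,
            self.team_can_influence,
            self.gives_early_warning,
        ))


def shortlist(candidates, max_kpis=7):
    """Keep candidates meeting all three criteria, in input order, up to max_kpis.

    Requiring all three is an assumption made for this sketch.
    """
    qualified = [c for c in candidates if c.criteria_met() == 3]
    return [c.name for c in qualified[:max_kpis]]
```

For example, a candidate such as `CandidateKpi("alerts_generated", False, True, False)` meets only one criterion and would be dropped, which matches the earlier point that alert counts measure activity rather than effectiveness.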

Step 4: Design Data Collection Architecture

Effective security metrics require reliable data collection from multiple sources. We design architectures that automatically aggregate data from security tools, normalize different data formats, and maintain historical trends. This involves establishing data pipelines that can handle the volume and variety of security data while ensuring accuracy and timeliness. The key is building systems that reduce manual effort while maintaining data quality.
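To make the normalization step concrete, here is a minimal sketch that maps tool-specific severity labels onto one shared scale and converts timestamps to UTC. The source names (`scanner`, `siem`), their severity vocabularies, and the 1-4 scale are all assumptions for illustration; real tools will need their own mappings.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical per-tool severity vocabularies mapped onto a shared 1-4 scale.
SEVERITY_MAPS = {
    "scanner": {"info": 1, "low": 1, "medium": 2, "high": 3, "critical": 4},
    "siem": {"1": 1, "2": 2, "3": 3, "4": 4, "5": 4},
}


@dataclass(frozen=True)
class NormalizedFinding:
    source: str
    severity: int          # 1 (lowest) to 4 (highest)
    observed_at: datetime  # always timezone-aware UTC


def normalize(source, raw_severity, epoch_seconds):
    """Convert one raw record into the shared schema.

    Unknown severities raise instead of being guessed, so gaps in the
    mapping surface as pipeline errors rather than silently skewed metrics.
    """
    mapping = SEVERITY_MAPS[source]
    key = str(raw_severity).strip().lower()
    if key not in mapping:
        raise ValueError(f"unmapped severity {raw_severity!r} from {source!r}")
    observed_at = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc)
    return NormalizedFinding(source, mapping[key], observed_at)
```

Failing loudly on an unmapped value is a deliberate choice here: a silent default would keep the pipeline running but would undermine the data quality the metrics depend on.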

Step 5: Create Actionable Dashboards

Dashboard design makes the difference between metrics that inform action and metrics that gather digital dust. We follow visualization principles that highlight exceptions, show trends over time, and provide drill-down capabilities for investigation. Executive dashboards focus on high-level trends and business impact, while operational dashboards provide real-time status and detailed metrics for security teams.

Step 6: Implement Trend Analysis and Alerting

Static metrics provide snapshots, but security improvement requires understanding trends and patterns over time. We implement statistical analysis that identifies meaningful changes versus normal variation, seasonal patterns that affect security metrics, and correlation between different security measures. Automated alerting ensures that significant trends trigger appropriate responses without overwhelming teams with false positives.
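One simple way to separate meaningful change from normal variation is a control-limit check: compare the latest value with the mean of a baseline window and flag it only when it falls several standard deviations away. The sketch below assumes a three-sigma threshold and does not account for seasonality or correlation between metrics; it is a starting point rather than the full analysis described above.

```python
from statistics import mean, stdev


def is_significant_change(baseline, latest, threshold=3.0):
    """Return True when `latest` is more than `threshold` sample standard
    deviations away from the mean of `baseline`.

    `baseline` is a sequence of past observations of the same metric,
    for example weekly counts. The default threshold is an assumption.
    """
    if len(baseline) < 2:
        raise ValueError("need at least two baseline points")
    center = mean(baseline)
    spread = stdev(baseline)
    if spread == 0:
        # A perfectly flat baseline: any deviation at all is a change.
        return latest != center
    return abs(latest - center) / spread > threshold
```

With a baseline of `[10, 12, 11, 9, 10, 11, 10, 12]`, a new value of 11 stays within normal variation while a value of 20 is flagged. Raising the threshold trades sensitivity for fewer false positives.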

Step 7: Establish Review and Improvement Processes

The most sophisticated metrics program fails without regular review and continuous improvement. We establish formal processes for monthly operational reviews, quarterly strategic assessments, and annual program evaluations. These reviews examine not just the metrics themselves but the effectiveness of the metrics program in driving security improvements.

Implementation: Building Effective Security Dashboards

The dashboard creation process represents one of the most challenging aspects of security metrics implementation, primarily because it requires balancing technical accuracy with stakeholder usability.

When designing executive-level security dashboards, we focus on translating technical security metrics into business language. Instead of showing raw vulnerability counts, we present risk exposure trends. Rather than displaying alert volumes, we highlight detection capability improvements. The key insight we've learned is that different stakeholders need different perspectives on the same underlying data.

For operational security teams, dashboards must provide real-time situational awareness while supporting investigation workflows. We implement layered information architecture where high-level status indicators allow quick assessment, but clicking through provides progressively more detailed information. This approach prevents information overload while ensuring necessary details remain accessible.

The technical implementation involves solving data integration challenges across multiple security platforms. We've found that establishing standardized data schemas and implementing robust ETL processes are crucial for maintaining dashboard reliability. The temptation to create quick point-to-point integrations often leads to brittle systems that break when security tools are updated or replaced.

One critical aspect often overlooked is dashboard performance optimization. Security data volumes can be enormous, and poorly designed queries can make dashboards unusably slow. We implement data aggregation strategies, caching mechanisms, and query optimization techniques that ensure dashboards remain responsive even as data volumes grow.
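As an example of the aggregation idea, the sketch below collapses raw events into daily counts per severity so that a dashboard can query a small rollup instead of scanning every event. The event shape, a pair of epoch seconds and a severity label, is an assumption made for the example, and caching and query tuning are not shown.

```python
from collections import Counter
from datetime import datetime, timezone


def daily_rollup(events):
    """Collapse (epoch_seconds, severity) events into counts keyed by
    (ISO date in UTC, severity)."""
    counts = Counter()
    for epoch_seconds, severity in events:
        day = datetime.fromtimestamp(epoch_seconds, tz=timezone.utc).date()
        counts[(day.isoformat(), severity)] += 1
    return dict(counts)
```

A rollup like this would typically be computed on a schedule and stored, so dashboard queries read a number of rows that grows with the number of days and severities rather than with the raw event volume.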

Results and Validation: Measuring Security Metrics Success

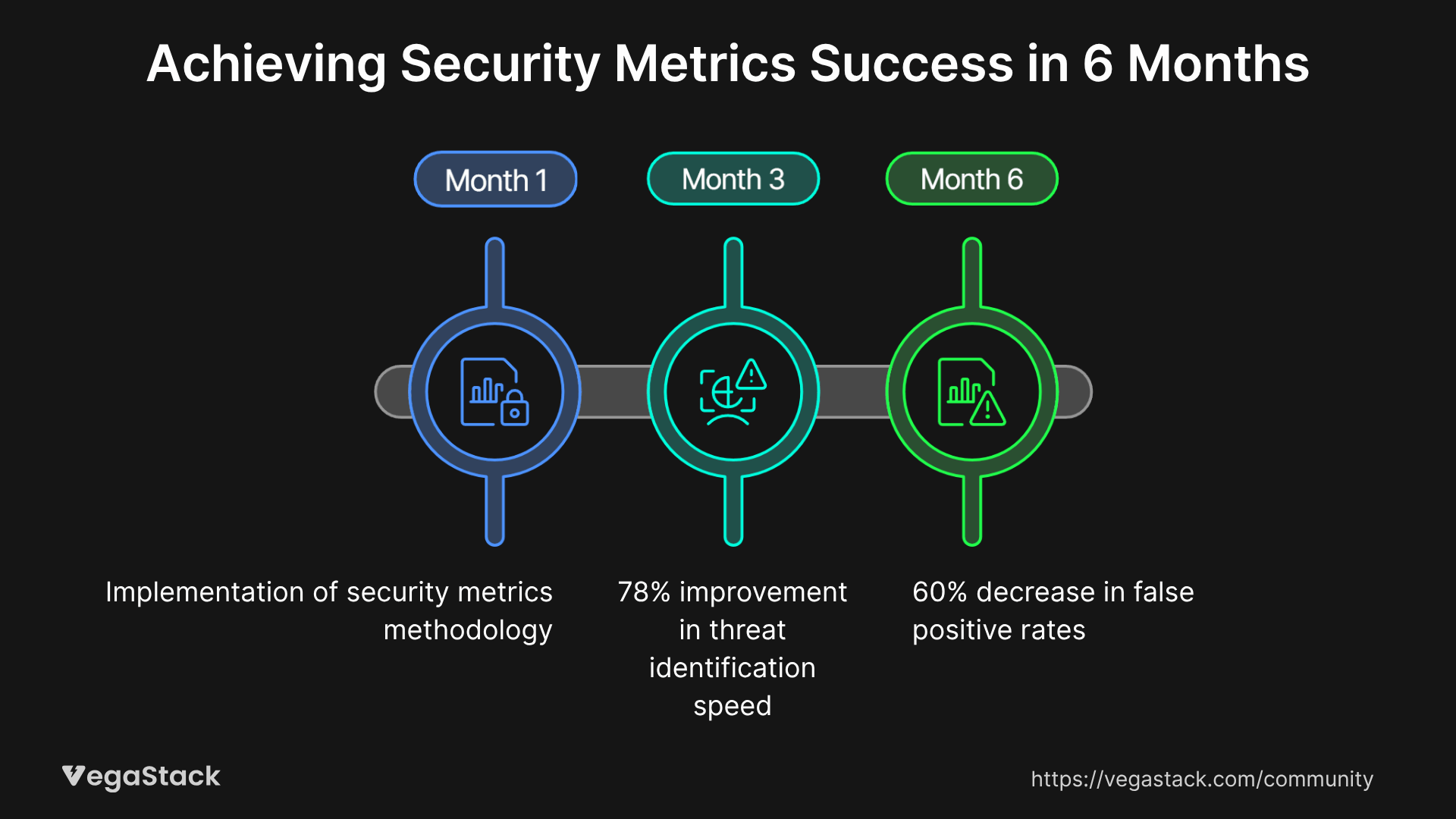

The financial services company we mentioned earlier provides an excellent example of security metrics program success. After implementing our methodology, they achieved several measurable improvements within 6 months.

Their mean time to detection improved from 18 days to 4 days, representing a 78% improvement in threat identification speed. Incident response times decreased from an average of 12 hours to 3 hours, significantly reducing the potential impact of security incidents. Perhaps most importantly, their security team satisfaction scores increased dramatically as they gained visibility into their effectiveness and could demonstrate value to executive leadership.
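For readers who want to reproduce this kind of figure, the sketch below shows one way to compute mean time to detection and the percentage improvement between two measurements. It assumes each incident is recorded as a pair of timestamps, when it began and when it was detected; how those timestamps are established in practice is outside the scope of the example.

```python
from datetime import datetime
from statistics import fmean


def mean_time_to_detect_days(incidents):
    """Average gap, in days, between incident start and detection.

    `incidents` is an iterable of (started_at, detected_at) datetime pairs.
    """
    gaps = [
        (detected - started).total_seconds() / 86400
        for started, detected in incidents
    ]
    if not gaps:
        raise ValueError("at least one incident is required")
    return fmean(gaps)


def percent_improvement(before, after):
    """Relative reduction from `before` to `after`, as a percentage."""
    if before <= 0:
        raise ValueError("`before` must be positive")
    return (before - after) / before * 100
```

Applied to the figures above, `percent_improvement(18, 4)` gives roughly 77.8, which rounds to the 78% quoted, and `percent_improvement(12, 3)` gives 75 for the drop in incident response time.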

From a business perspective, the improvements translated into tangible benefits. Their cyber insurance premiums decreased by $3,200 annually due to demonstrated security improvements. Compliance audit preparation time fell from 40 hours to 12 hours per quarter, saving approximately $2,800 in consultant fees. The security team could reallocate 15 hours per week from manual reporting to proactive security activities.

Technical metrics showed equally impressive improvements. False positive rates from their SIEM decreased by 60% through better tuning informed by metrics analysis. Vulnerability remediation times improved from an average of 45 days to 18 days for critical vulnerabilities. Security tool utilization increased from 30% to 85% as metrics revealed underutilized capabilities.

The limitations of their metrics program also provided valuable insights. Some metrics initially selected proved less useful than anticipated, requiring program adjustments. Certain data sources proved unreliable, necessitating backup collection methods. These challenges reinforced the importance of treating security metrics as an evolving program rather than a one-time implementation.

Key Learnings and Best Practices

Through multiple security metrics implementations, we've identified 6 fundamental principles that determine program success.

Start Small and Expand Gradually: Organizations that try to implement comprehensive metrics programs immediately often struggle with data quality issues and stakeholder confusion. We recommend beginning with 3-4 core metrics, ensuring they provide value, then expanding the program based on lessons learned.

Focus on Leading Indicators: Lagging indicators tell you what happened but don't help prevent future problems. Leading indicators like security tool coverage gaps, patch deployment delays, and training completion rates help identify problems before they become incidents.

Automate Data Collection: Manual data collection doesn't scale and introduces errors that undermine metric credibility. Investing in automation infrastructure pays dividends in data quality and team efficiency.

Align Metrics with Behavior: Every metric should drive specific behaviors toward better security outcomes. If a metric doesn't influence decisions or actions, it's probably not worth tracking.

Validate Metric Accuracy Regularly: Security environments change rapidly, and metrics that were accurate 6 months ago may no longer reflect reality. Regular validation ensures metrics remain reliable indicators of security posture.

Communicate Context with Numbers: Raw metrics without context often mislead stakeholders. Always provide benchmarks, trends, and explanations that help stakeholders understand what metrics mean for organizational security.
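Of the leading indicators mentioned above, security tool coverage gaps are among the simplest to compute: compare the asset inventory with the set of assets a tool actually reports on. The sketch below assumes both lists use the same asset identifiers, which in practice often requires a reconciliation step first; the host names in the usage note are hypothetical.

```python
def coverage_gap(inventory, covered):
    """Return (sorted list of uncovered assets, percentage of inventory uncovered).

    Assets reported by the tool but absent from the inventory are ignored
    here; they may point to an inventory problem worth tracking separately.
    """
    known = set(inventory)
    missing = known - set(covered)
    percent_missing = 100 * len(missing) / len(known) if known else 0.0
    return sorted(missing), percent_missing
```

For example, `coverage_gap(["web-1", "web-2", "db-1", "db-2"], ["web-1", "db-1"])` returns `(["db-2", "web-2"], 50.0)`. Reporting the percentage alongside the named assets follows the last principle above: the number shows the trend, and the list gives the context needed to act on it.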

Conclusion

Implementing effective security metrics transforms security from a necessary cost center into a strategic capability that demonstrates measurable value. The methodology we've outlined, from defining objectives through continuous improvement, provides a practical framework for building metrics programs that drive real security improvements.

The key to success lies in treating security metrics as a discipline that requires the same rigor as other engineering practices. By focusing on actionable insights rather than vanity metrics, organizations can build security programs that continuously improve and adapt to evolving threats.

As cyber threats become more sophisticated and regulatory requirements more stringent, the ability to measure and demonstrate security effectiveness becomes increasingly critical. The question isn't whether your organization needs better security metrics; it's whether you'll implement them before or after your next security incident forces the conversation.