Slack’s Chef Scaling for Tens of Thousands of Servers

Learn how Slack scaled their Chef infrastructure from a single stack to a sophisticated sharded system managing tens of thousands of EC2 instances. Discover their transformation strategy that eliminated single points of failure and introduced safer deployment practices.

Introduction

Managing infrastructure at scale presents unique challenges that can make or break an organization's operational efficiency. According to the Slack engineering team, their journey from a single Chef infrastructure stack to a sophisticated sharded system managing tens of thousands of EC2 instances offers valuable insights for any organization grappling with rapid infrastructure growth. Their transformation not only eliminated critical single points of failure but also introduced safer deployment practices that prevent system-wide outages from faulty changes.

The scale of Slack's infrastructure is impressive: tens of thousands of EC2 instances hosting Vitess databases, Kubernetes workers, and various application components across Ubuntu and Amazon Linux environments. What makes their story particularly compelling is how they solved the fundamental challenge of provisioning instances and deploying changes safely across such a massive fleet while maintaining high availability and operational efficiency.

The Breaking Point: When Single Infrastructure Stack Becomes a Liability

In Slack's early days, their infrastructure management followed a common pattern that many growing companies will recognize. They operated a single Chef stack consisting of EC2 instances, an AWS application load balancer, an RDS cluster, and an AWS OpenSearch cluster. This setup included three environments- Sandbox, Dev, and Prod with all fleet nodes mapped to one of these environments.

Their deployment process, while functional, revealed critical vulnerabilities as they scaled. The system used a process called DishPig that triggered every hour to upload cookbook changes to the Chef server. When changes were merged to their repository, a CI job would build an artifact, upload it to S3, and notify an SQS queue. DishPig would then download and deploy these changes across all environments simultaneously.

The fundamental problem became clear: all changes deployed across all environments at once meant that any faulty change could disrupt Chef runs and server provisions across their entire infrastructure. This approach created an unacceptable level of risk for a company whose infrastructure was growing rapidly and whose uptime requirements were becoming increasingly stringent.

Additionally, the single Chef stack represented a major single point of failure. Any issues with this stack could impact their entire infrastructure, potentially affecting millions of users who depend on Slack's services daily.

The Strategic Decision: Moving to Sharded Infrastructure

Recognizing that reliability couldn't be compromised as they scaled, Slack's engineering team made the strategic decision to redesign their Chef infrastructure fundamentally. Their goal was twofold: eliminate the single point of failure and create a system that could handle their growth trajectory while improving deployment safety.

The solution involved creating multiple Chef stacks to distribute load and ensure resilience. This approach would allow them to direct new provisions to operational Chef stacks if one failed, maintaining service continuity. However, implementing this strategy introduced several complex challenges that required innovative solutions.

The team also made the strategic decision to separate development and production Chef infrastructure into distinct stacks, strengthening the boundary between environments, a critical consideration for maintaining production stability.

Engineering Solutions: Overcoming Four Critical Challenges

Challenge 1: Intelligent Shard Assignment

The first technical hurdle involved directing new server provisions to specific shards effectively. Slack's solution used AWS Route53 Weighted CNAME records. When an instance spins up, it queries the CNAME record and receives a response based on assigned weights, determining which Chef stack manages that instance.

This approach provided the flexibility needed to balance load across shards while maintaining the ability to redirect traffic when necessary.

Challenge 2: Solving the Service Discovery Problem

Moving to sharded infrastructure broke Slack's existing service discovery mechanism. Previously, teams used Chef search to locate nodes based on criteria like Chef roles in specific AWS regions. With sharded infrastructure, these searches only returned nodes from the queried stack, providing an incomplete view.

Slack's engineering team solved this by leveraging Consul for service discovery, taking advantage of its tagging capabilities. However, this created a circular dependency problem: Consul queries required Nebula (their overlay network) to be configured, but Nebula was configured by Chef.

Their innovative solution used Chef's ruby_block resources, which execute during the converge phase rather than the compile phase. This allowed them to ensure Nebula was installed and configured before processing Consul-dependent resources. They also developed helper functions to replicate Chef search functionality through Consul queries.

Challenge 3: Unified Search Across Shards

To maintain the ability to search across their entire infrastructure, Slack developed Shearch (Sharded Chef Search), a service with an API that accepts Chef queries, runs them across multiple shards, and consolidates results. They also created Gnife (Go Knife), a replacement for the traditional Knife command that works across multiple shards using the Shearch service.

Challenge 4: Coordinated Cookbook Deployment

The final challenge involved deploying cookbooks consistently across multiple Chef stacks. Initially, they modified their existing DishPig system by deploying multiple instances, each dedicated to a specific stack. However, this approach led to consistency issues where different stacks could end up with different cookbook versions.

The Game-Changer: Introducing Chef Librarian

Recognizing the limitations of their interim solution, Slack developed Chef Librarian, a comprehensive replacement for DishPig that addressed cookbook versioning and environment management across multiple Chef stacks.

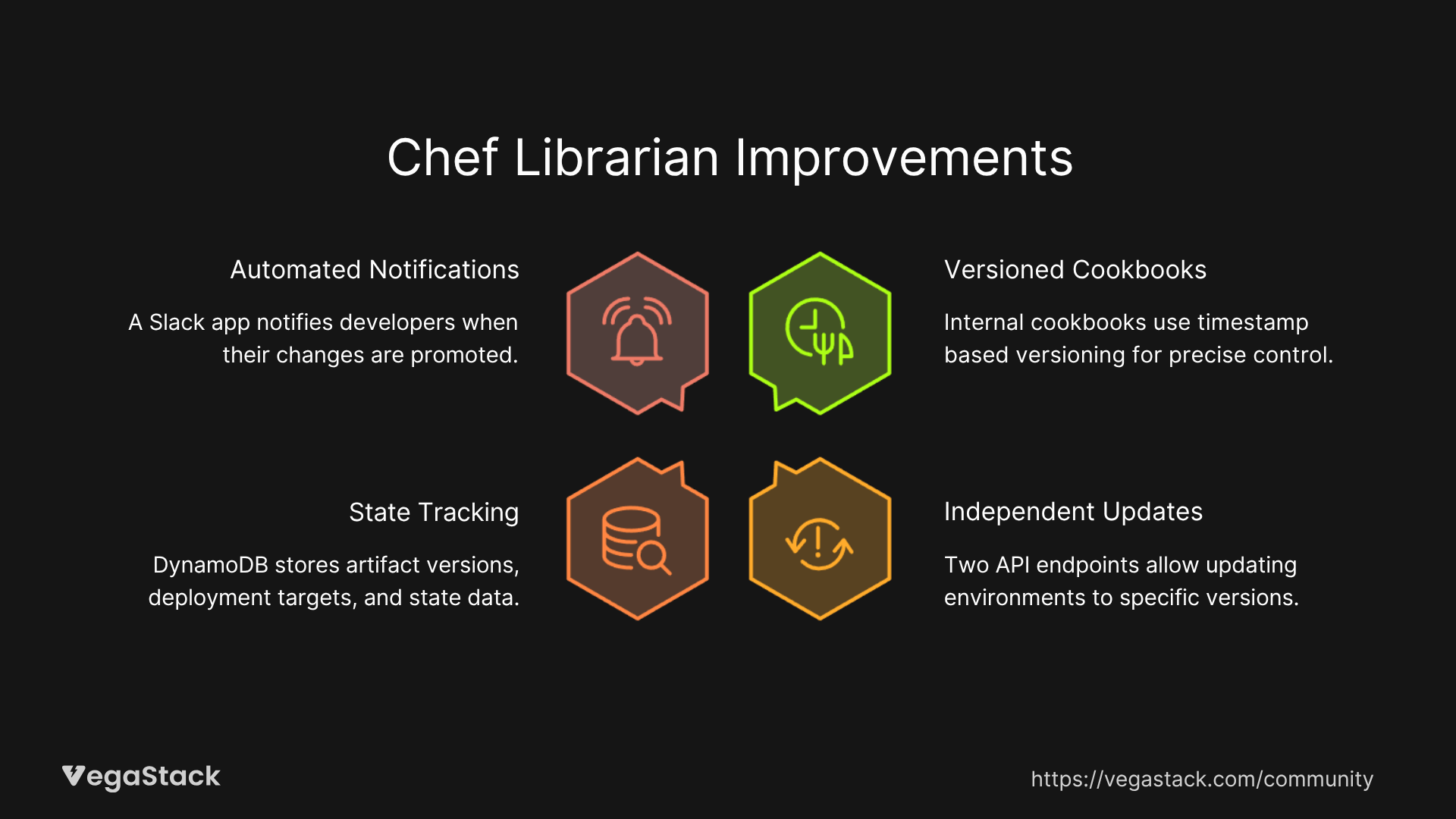

Chef Librarian introduced several key improvements:

- Versioned Cookbooks: Internal cookbooks now use timestamp-based versioning (YYYYMMDD.TIMESTAMP.0), enabling precise version control

- Independent Environment Updates: Two API endpoints allow updating environments to specific versions or matching versions between environments

- State Tracking: DynamoDB storage tracks artifact versions, deployment targets, and state information

- Automated Notifications: A Slack app notifies developers when their changes are promoted, identifying users through Git commits

The system enables a safer deployment pipeline where changes first go to sandbox and development environments for monitoring before production promotion. This staged approach prevents problematic changes from impacting all environments simultaneously.

Impressive Results: Enhanced Safety and Scalability

The transformation to sharded Chef infrastructure delivered significant improvements across multiple metrics:

Reliability Improvements:

- Eliminated single point of failure that previously threatened entire infrastructure

- Enabled continued operations even when individual Chef stacks experience issues

- Reduced blast radius of faulty changes through staged deployments

Operational Efficiency:

- Maintained service discovery capabilities across distributed infrastructure

- Preserved familiar tooling experience while working across multiple shards

- Automated deployment notifications keep developers informed of change status

Deployment Safety:

- Staged deployment process catches issues before production impact

- Manual override capabilities allow blocking problematic promotions

- Comprehensive tracking provides visibility into deployment pipeline

Scalability Foundation:

- Infrastructure design supports continued growth without architectural changes

- Load distribution across multiple stacks prevents performance bottlenecks

- Flexible shard assignment adapts to changing capacity requirements

Key Lessons for Infrastructure Teams

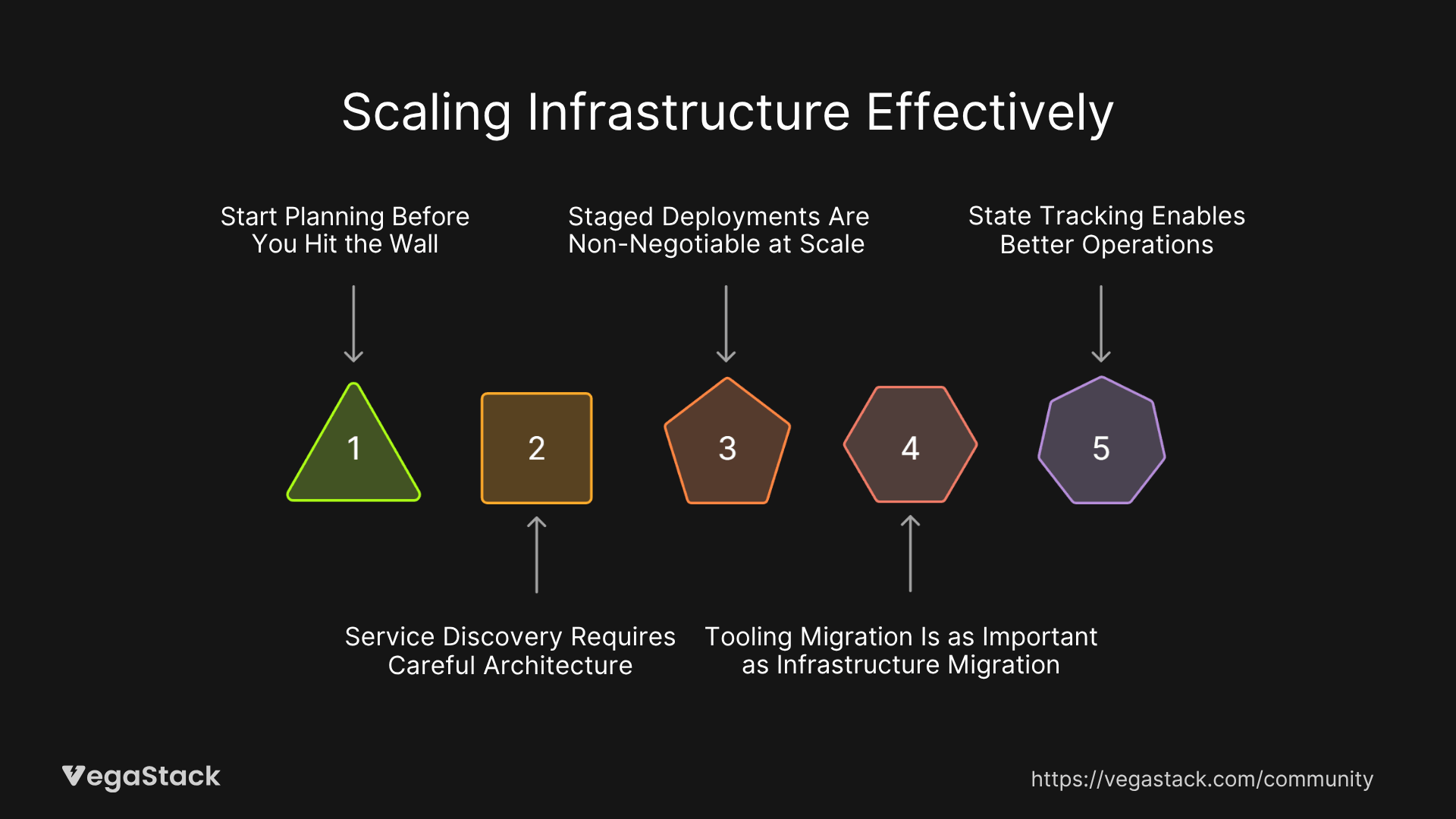

Slack's transformation offers several valuable insights for organizations facing similar infrastructure scaling challenges:

Start Planning Before You Hit the Wall: Single infrastructure stacks work until they don't. Planning the transition to distributed infrastructure before crisis hits allows for more thoughtful architecture decisions.

Service Discovery Requires Careful Architecture: Moving from centralized to distributed infrastructure breaks existing service discovery patterns. Plan for alternative discovery mechanisms early in the design process.

Staged Deployments Are Non-Negotiable at Scale: The ability to test changes in non-production environments before full rollout becomes critical as infrastructure grows. Build this capability into your deployment pipeline from the start.

Tooling Migration Is as Important as Infrastructure Migration: Users need familiar interfaces even as underlying architecture changes. Invest in maintaining user experience during infrastructure transitions.

State Tracking Enables Better Operations: Comprehensive tracking of what's deployed where dramatically improves debugging and rollback capabilities.

Looking Forward: The Next Evolution

Slack's engineering team isn't stopping with their current improvements. They're exploring further segmentation of Chef environments, potentially breaking down production environments by AWS availability zones to enable even more granular change control.

They're also investigating Chef PolicyFiles and PolicyGroups, which would represent a significant architectural shift but could offer greater flexibility and safety for deploying changes to specific nodes.

The scale of these potential changes reflects the ongoing evolution required when managing infrastructure at Slack's scale, a reminder that infrastructure architecture is never truly "finished" but continues evolving with organizational needs.

For organizations currently managing growing infrastructure, Slack's journey demonstrates that proactive architectural evolution can prevent scaling crises while maintaining operational stability. The key is recognizing when current approaches won't scale and investing in the architectural changes needed for the next phase of growth.