How Stripe Solved Hourly DNS Failures and Cut Query Traffic by 86% Using Smart Load Distribution

Discover how Stripe eliminated hourly DNS failures and reduced query traffic by 86% through smart load distribution. Learn their proven DNS optimization strategies, load balancing techniques, and reliability improvements. Get practical insights for building resilient DNS infrastructure at scale.

Introduction

Every hour on the hour, Stripe's DNS infrastructure was failing. For several critical minutes, their DNS servers were returning SERVFAIL responses for internal requests, creating the kind of intermittent outage that makes engineers lose sleep and business leaders question system reliability.

The culprit? A seemingly innocent Hadoop job that was generating massive spikes in reverse DNS lookups, overwhelming their AWS VPC resolver with 7x amplified traffic that exceeded critical rate limits. According to the Stripe engineering team, this hourly disruption was affecting their entire DNS infrastructure, which serves as the foundational layer for all their payment processing communications.

Through methodical investigation using packet capture analysis, custom monitoring tools, and strategic load redistribution, Stripe's team identified the root cause and implemented a solution that eliminated the failures while reducing DNS query traffic by 86%. Their approach offers valuable lessons for any organization managing complex DNS infrastructure at scale.

The High-Stakes Problem: When DNS Fails, Everything Fails

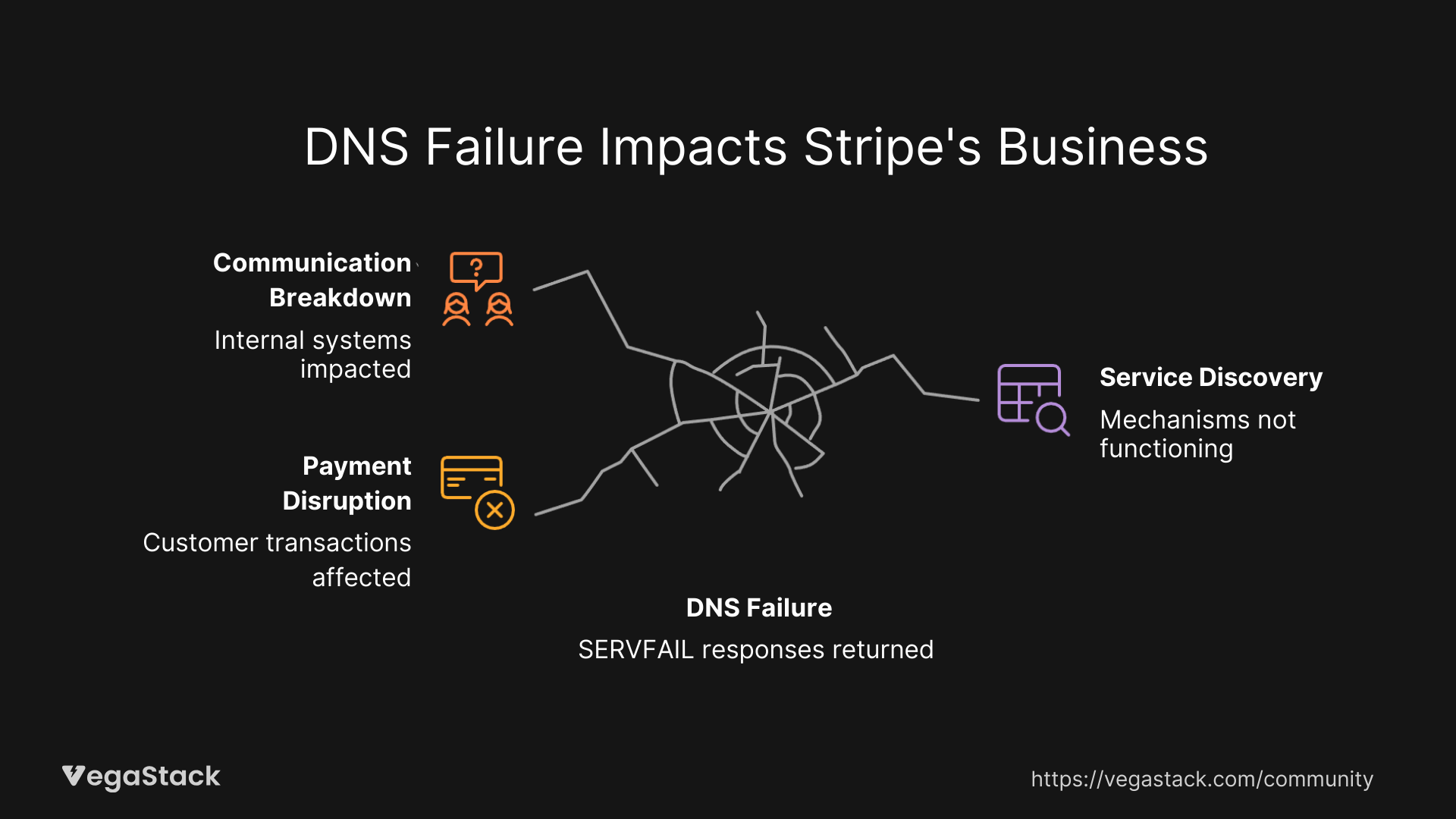

DNS infrastructure is often invisible until it breaks, and when it breaks, the impact cascades through every system that depends on network communication. For a company like Stripe, processing billions of dollars in payments, DNS reliability isn't just a technical concern; it's a business-critical requirement.

The symptoms appeared deceptively simple: every hour, for several minutes, their DNS cluster would return SERVFAIL responses for a small percentage of internal requests. SERVFAIL is DNS's equivalent of a generic error message, it tells you something went wrong, but provides no insight into the underlying cause.

What made this particularly challenging was the intermittent nature of the problem. The failures weren't constant, they weren't tied to obvious load spikes, and the affected queries represented only a small percentage of total traffic. Yet even a small percentage of DNS failures can cause significant downstream effects, potentially impacting payment processing, internal communications, and service discovery mechanisms.

The business stakes were clear: DNS issues can quickly escalate into widespread outages, affecting customer transactions and revenue. For Stripe's engineering team, this wasn't just a technical puzzle to solve, it was a ticking time bomb that needed swift resolution.

The Detective Work: Following the Clues Through Network Traffic

The investigation began with what the Stripe team had: metrics showing periodic spikes in SERVFAIL responses and an increase in Unbound's request list depth metric. Think of this metric as DNS's internal to-do list, when it grows, it means the system can't keep up with incoming requests.

Initially, the obvious suspects didn't pan out. Query volume wasn't significantly higher during problem periods, and system resources weren't hitting limits. This led the team to investigate whether upstream DNS servers were responding slowly, causing the bottleneck.

Using Unbound's diagnostic tools, they discovered that most problematic requests were reverse DNS lookups (PTR records) waiting for responses from their AWS VPC resolver at IP address 10.0.0.2. This was the first concrete clue pointing toward a specific component in their DNS chain.

The breakthrough came through strategic packet capture analysis. The team used tcpdump to collect DNS traffic data in 60-second intervals over 30 minutes, timing the collection to capture one of the hourly spikes. This approach yielded gold: during spike periods, 90% of requests to the VPC resolver were reverse DNS queries for IPs in the 104.16.0.0/12 CIDR range, and the vast majority were failing with SERVFAIL responses.

By correlating the timing of these spikes with their internal job scheduling database, they traced the source to a single Hadoop job that analyzes network activity logs and performs reverse DNS lookups on IP addresses found in those logs.

The Root Cause: Traffic Amplification Meets Rate Limits

The most revealing discovery came from deeper packet analysis. During one 60-second collection period, Stripe's DNS server sent 257,430 packets to the AWS VPC resolver but received only 61,385 responses back, a response rate of just 24%. The VPC resolver was responding at exactly 1,023 packets per second, suspiciously close to AWS's documented limit of 1,024 packets per second per network interface.

This revealed a perfect storm of cascading failures:

- The Trigger: A Hadoop job performing reverse DNS lookups on network logs

- The Bottleneck: AWS VPC resolver rate limiting at 1,024 packets per second

- The Amplification: Failed lookups caused clients to retry requests 5 times, while DNS servers also retried, creating 7x traffic amplification

- The Cascade: Accumulated failures led to SERVFAIL responses affecting other DNS queries

The team confirmed their hypothesis by creating custom monitoring using iptables rules to track packets sent to the VPC resolver. This simple but effective solution involved creating an iptables rule matching traffic to the VPC resolver IP and monitoring the packet count through their metrics pipeline.

The Solution: Strategic Load Distribution and Zone-Based Forwarding

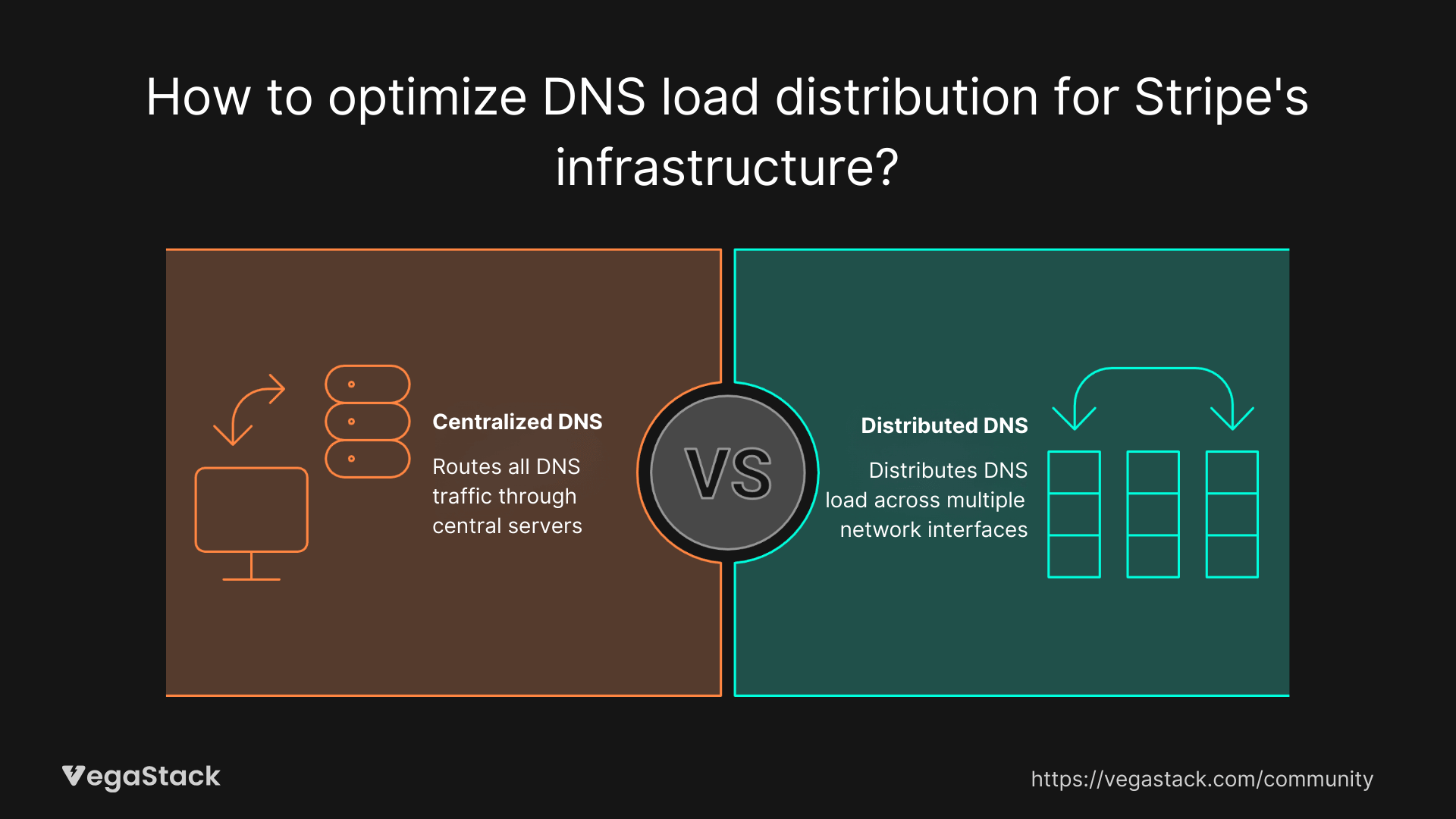

Rather than trying to optimize the problematic Hadoop job or requesting higher AWS limits, Stripe's team chose an elegant architectural solution: distribute the DNS load across multiple network interfaces instead of centralizing it through their DNS servers.

The key insight was that AWS's 1,024 packets-per-second limit applies per network interface. By having individual hosts contact the VPC resolver directly instead of routing everything through their central DNS servers, they could effectively multiply their available capacity.

Using Unbound's flexible forwarding configuration, they implemented zone-based routing:

- Private address lookups (10.in-addr.arpa zone): Forwarded to VPC resolver with optimized timeouts

- Public address lookups (.in-addr.arpa zone): Also forwarded to VPC resolver but with separate timeout calculations

This approach leveraged Unbound's intelligent retry timeout system, which calculates delays based on smoothed averages of historical response times. By creating separate forwarding rules, they ensured that slow public DNS lookups wouldn't affect the timeout calculations for faster private address lookups.

Implementation: Turning Theory Into Results

The implementation was remarkably straightforward, a testament to good architectural design. The team modified the Unbound configuration on Hadoop nodes to include the new forwarding rules for reverse DNS queries. This change required no application modifications and minimal infrastructure changes.

The configuration change immediately eliminated the hourly load spikes to the VPC resolvers. By distributing the reverse DNS load across multiple network interfaces (one per Hadoop node), they stayed well within AWS rate limits while maintaining the same lookup functionality.

The team also implemented permanent monitoring improvements, including the iptables-based packet counting system that provides real-time visibility into VPC resolver utilization. This monitoring gives them early warning if they approach rate limits and helps identify the source of DNS performance issues.

Business Impact: Measurable Improvements Across the Board

The results were immediate and substantial:

- 86% reduction in DNS query traffic to central servers during peak periods

- 100% elimination of hourly SERVFAIL spikes

- Zero application changes required for implementation

- Improved system resilience through load distribution

- Enhanced monitoring capabilities for future incident prevention

Beyond the immediate technical fixes, the solution provided several business benefits:

- Reduced risk of DNS-related outages affecting payment processing

- Improved incident response capabilities through better monitoring

- Enhanced system understanding for future architectural decisions

- Cost efficiency by working within AWS limits rather than requesting expensive upgrades

The distributed approach also improved overall system resilience. Instead of having a single point of potential rate limiting, DNS queries are now distributed across multiple paths, reducing the risk of similar cascading failures.

Key Lessons: What This Means for Complex Infrastructure

Stripe's DNS investigation offers several transferable insights for organizations managing complex technical infrastructure:

Start with comprehensive monitoring: The investigation was only possible because Stripe had robust metrics collection from Unbound. Without baseline visibility into system behavior, identifying anomalies becomes nearly impossible.

Use multiple diagnostic approaches: The solution emerged from combining metrics analysis, packet capture, command-line tools, and custom monitoring. Each provided a different piece of the puzzle that wouldn't have been visible through any single method.

Consider cascading effects: The original problem wasn't just about one slow Hadoop job, it was about how that job's behavior cascaded through retry logic, rate limits, and shared infrastructure to affect unrelated systems.

Design for distribution: Centralized systems create single points of failure and bottlenecks. The solution worked because it distributed load across multiple paths rather than trying to optimize a single chokepoint.

Implement monitoring while solving: The team didn't just fix the immediate problem; they improved their monitoring capabilities to prevent similar issues and respond more quickly to future incidents.

Looking Forward: Building Resilient DNS Infrastructure

Stripe's experience demonstrates that even well-architected systems can develop unexpected failure modes as they scale and evolve. The key to managing this complexity lies in building comprehensive observability, understanding system interactions, and designing for graceful degradation.

The team continues to enhance their DNS monitoring with additional improvements like rolling packet captures and periodic logging of debugging information. These investments in observability pay dividends when the next unexpected issue arises.

For organizations building or maintaining DNS infrastructure, the lesson is clear: the time to build monitoring and understand your system's behavior is before problems occur, not during an outage. The tools and techniques that Stripe used, from packet capture to custom iptables monitoring, are accessible to any team willing to invest in understanding their infrastructure.